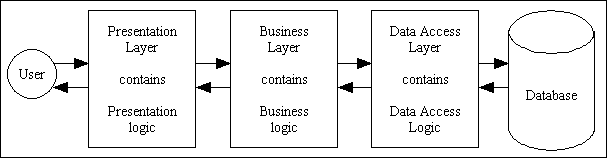

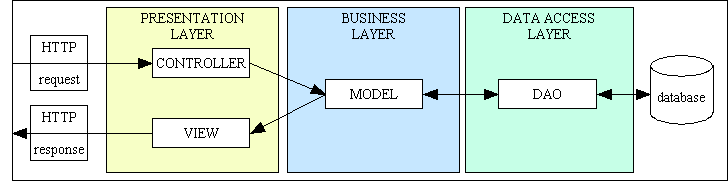

Figure 1 - The 3-Tier Architecture

I have been a computer programmer for several decades during which I have worked with many companies, many teams, more than a few programming languages, a variety of hardware (mainframes, mini-computers and micro-computers), several file systems (flat files, indexed files, hierarchical databases, network databases and relational databases), many projects, many development standards, and several programming paradigms (procedural (COBOL), component based (UNIFACE) and object oriented (PHP). I have worked with punched card, paper tape, teletypes, dumb terminals (green screens) working in both character mode and block mode, and Personal Computers. I have written applications which run on the desktop as well as the internet. I have progressed from writing programs from other people's designs and specifications to designing and building entire applications which I either build myself or as the lead developer in a small team. I have also designed and built 3 frameworks in 3 different languages to aid in the development process.

Ever since I started programming with the Object Oriented paradigm I have noticed a disturbing trend in the amount of code which is supposed to be written in order to achieve a result. This is, in my humble opinion, a direct result of the purely dogmatic approach where a small group of self-styled "experts" try to enforce their personal programming style and artificial rules on everybody else in a totally dictatorial manner. In this article I will attempt to explain why I much prefer a more pragmatic approach in which the aim is to produce a satisfactory result with as little effort as possible.

It is my feeling that too many of today's programmers have forgotten the original and fundamental principles of computer programming and spend too much time in dreaming up new levels of complexity in order to make themselves appear more clever, more cool, and more acceptable to those whom they regard as their peers. It is my humble opinion that these extra levels of complexity are introduced by people who are trying to mask their underlying lack of programming skill. By making something appear to be more complex than it really is they are demonstrating a complete lack of understanding of both the problem and the solution.

In this article I will show why I choose to ignore all these unnecessary complexities yet am still able to produce effective results. I prefer to stick to old school principles and I don't embrace any new ideas unless I can see the benefit of doing so. My starting point is a simple fact which a lot of people seem to be unaware:

The primary function of a software developer is to develop cost-effective software for the benefit of the end user, not to impress other developers with the supposed cleverness of their code.

I am not alone in this opinion. In his blog post The narrow path between best practices and over-engineering Juri Strumpflohner writes:

What many software engineers often forget, is what we are here for after all: to serve the customer's need; to automate complex operations and to facilitate, not complicate, his life. Obviously we are the technicians and we therefore need to do our best to avoid technical deadlocks, cost explosions etc. But we are not here to create the architectures of our dreams. Too often I have the feeling that we would be better served to invest our time in more intuitive, damn simple user interfaces rather than complex back end architectures.

Writing software that does not solve the customer's problem in a cost-effective manner, and which cannot be understood and therefore maintained by developers of lesser ability, is a big mistake, and it does not matter that you and your fellow developers wax lyrical over the brilliant architecture, the number of classes, the number of design patterns, the number of layers of indirection, or the number of clever, fancy or esoteric features and functions that you have crammed into your design or your code.

Another simple fact which I learnt early on in my career and which I strive to follow at every possible opportunity is:

The best solution is almost always the simplest solution.

A truly skilled engineer will always strive to produce a solution which is simple and elegant while others can do no more than produce eccentric machines in the style of Heath Robinson or Rube Goldberg. Too many people look at a task which is well-established yet manual (simple) and assume that it needs to be updated into something more modern and automated (complex) with all the latest go-faster-stripes with bells-and-whistles. They become so obsessed with making it "modern" they lose sight of the fact that their "improvements" make their solution more complicated than the original problem. A prime example of this is the automatic light bulb changer which is an ugly monstrosity with over 200 parts. Hands up those who would prefer to stick to the "old fashioned" way of changing a light bulb? (1) Unscrew old bulb, (2) Screw in replacement bulb.

Many wise people had said many things about the idea that "simple is best", some of which are documented in Quotations in support of Simplicity.

The approach which I have learned to follow throughout my long career is based on the idea that the best solution is the simplest solution as well as the smallest solution. This philosophy is known as "minimalism" as it fits this description from wikipedia:

Minimalism is any design or style wherein the simplest and fewest elements are used to create the maximum effect.

In his book "Minimum" John Pawson describes it thus:

The minimum could be defined as the perfection that an artefact achieves when it is no longer possible to improve it by subtraction. This is the quality that an object has when every component, every detail, and every junction has been reduced or condensed to the essentials. It is the result of the omission of the inessentials.

Russ Miles, in his talk Without Simplicity, There Is no Agility, provided the following description:

Simplicity is reduction to the point that any further reduction will remove important value.

Minimalism is not a concept which is confined to just art and architecture, it can be applied to any area which involves design, whether it be buildings, cars or software. Anything which can be designed can be over-designed into something which is over-complicated. The trick is to spot those complications which are not actually necessary, and remove them. Unfortunately it seems that few people have the ability to look at an area of complexity and decide that it is more complex than it could be. They are told by others that it has to be that complex because there is no other way.

So there it is is a nutshell - include what is necessary and exclude what is unnecessary. If you can remove something and your program still works, then what you removed was effectively useless and redundant. In the remainder of this document I will highlight those specific areas where I cut out the crap excess fat and produce code which is lean and mean.

Which is best - simplicity or complexity? How much code should write - little or lots? Here are some quotations from various famous or not-so-famous people:

Simplicity is the soul of efficiency.

All that is complex is not useful. All that is useful is simple.

Everything should be made as simple as possible, but not simpler.

Some people seem to have difficulty understanding what the phrase "but not simpler" actually means. If something is too simple then it won't actually work, so you should only add complexity in small increments until you have something that works, and then you stop adding.

To arrive at the simple is difficult.

The unavoidable price of reliability is simplicity.

Simplicity is prerequisite for reliability.

Simplicity does not precede complexity, but follows it.

Technical skill is mastery of complexity, while creativity is mastery of simplicity.

Complexity means distracted effort. Simplicity means focused effort.

A complex system that works is invariably found to have evolved from a simple system that works.

There are two ways of constructing a software design. One way is to make it so simple that there are obviously no deficiencies. And the other way is to make it so complicated that there are no obvious deficiencies.

...Simplifications have had a much greater long-range scientific impact than individual feats of ingenuity. The opportunity for simplification is very encouraging, because in all examples that come to mind the simple and elegant systems tend to be easier and faster to design and get right, more efficient in execution, and much more reliable than the more contrived contraptions that have to be debugged into some degree of acceptability.... Simplicity and elegance are unpopular because they require hard work and discipline to achieve and education to be appreciated.

Less is more.

Remember that there is no code faster than no code.

The cheapest, fastest, and most reliable components of a computer system are those that aren't there.

The ability to simplify means to eliminate the unnecessary so that the necessary may speak.

Perfection (in design) is achieved not when there is nothing more to add, but rather when there is nothing more to take away.

I have yet to see any problem, however complicated, which, when you looked at it in the right way, did not become still more complicated.

Smart data structures and dumb code works a lot better than the other way around.

[...] the purpose of abstraction is not to be vague, but to create a new semantic level in which one can be absolutely precise.

... the cost of adding a feature isn't just the time it takes to code it. The cost also includes the addition of an obstacle to future expansion. ... The trick is to pick the features that don't fight each other.

One of the most dangerous (and evil) things ever injected into the project world is the notion of process maturity. Process maturity is for replicable manufacturing contexts. Projects are one-time shots. Replicability is never the primary issue on one-time shots. More evil than good has come from the notion that we should "stick to the methodology." This is a recipe for non-adaptive death. I'd rather die by commission.

Fools ignore complexity; pragmatists suffer it; experts avoid it; geniuses remove it.

Any intelligent fool can make things bigger, more complex, and more violent. It takes a touch of genius - and a lot of courage - to move in the opposite direction.

Controlling complexity is the essence of computer programming.

Complexity is a sign of technical immaturity. Simplicity of use is the real sign of a well design product whether it is an ATM or a Patriot missile.

Complexity kills. It sucks the life out of developers, it makes products difficult to plan, build and test, it introduces security challenges and it causes end-user and administrator frustration. ...[we should] explore and embrace techniques to reduce complexity.

The inherent complexity of a software system is related to the problem it is trying to solve. The actual complexity is related to the size and structure of the software system as actually built. The difference is a measure of the inability to match the solution to the problem.

Increasingly, people seem to misinterpret complexity as sophistication, which is baffling --- the incomprehensible should cause suspicion rather than admiration. Possibly this trend results from a mistaken belief that using a somewhat mysterious device confers an aura of power on the user.

That simplicity is the ultimate sophistication. What we meant by that was when you start looking at a problem and it seems really simple with all these simple solutions, you don't really understand the complexity of the problem. And your solutions are way too oversimplified, and they don't work. Then you get into the problem, and you see it's really complicated. And you come up with all these convoluted solutions. That's sort of the middle, and that's where most people stop, and the solutions tend to work for a while. But the really great person will keep on going and find, sort of, the key, underlying principle of the problem. And come up with a beautiful elegant solution that works.

If you cannot grok the overall structure of a program while taking a shower, you are not ready to code it.

Beauty is more important in computing than anywhere else in technology because software is so complicated. Beauty is the ultimate defense against complexity.

When I am working on a problem, I never think about beauty. I think only of how to solve the problem. But when I have finished, if the solution is not beautiful, I know it is wrong.

So the general consensus here is that if you have a choice between a complex solution and a simple solution the wisest person will always go for the simplest solution. The unwise will go for the complex solution in the belief that it will impress others in positions of influence. They think it makes them look "clever", when in reality it is "too clever by half". This is called over-engineering and if often caused by feature creep. The other choice is to go for only enough code that will actually do the job and not to dress it up with unnecessary frills, frivolities or flamboyancies. This also means using as few classes and design patterns as is necessary and not as many as is possible.

If you do not like the idea that "less is better" then consider the fact that more than a few programmers have noticed that OO code runs slower than non-OO code. This is not because the use of classes and objects is slower per se, the actual culprit is the sheer volume of extra and unnecessary code. If you try using just enough classes and just enough code to get the job done then you will see timings that aren't so bloated.

The journey from novice to experienced programmer is not something which can be achieved overnight. It is not just a simple matter of learning a list of principles, it is how you put those principles into practice which really counts. Someone may be able to learn the wording of these principles and repeat them back to you parrot fashion, but without the ability to put those words into action the results will always be unsatisfactory. This journey can be simplified into the following stages:

To work or be Effective is defined as:

adequate to accomplish a purpose; producing the intended or expected result.

Clearly a program that produces the wrong result, or produces a result that nobody wants, or takes too long to produce its result is going to have very little value.

Efficient is defined as:

The accomplishment of or ability to accomplish a job with a minimum expenditure of time and effort.

There are actually several areas where efficiency can be measured in a computer program:

Maintainable is defined as:

The ease with which a software system or component can be modified to correct faults, improve performance or other attributes, or adapt to a changed environment.

It is extremely rare for a program to be written, then be run for a long period of time without someone, who may or may not be the original author, examining the code in order to see how it does what it does so that either a bug can be fixed or a change can be made. Code which cannot be maintained is a liability, not an asset. If it cannot easily be fixed or enhanced then it can only be replaced, and code which has to be replaced has no value, regardless of how well it was supposedly written.

It is therefore vitally important that those who have proven their skill in the art of software development can pass on their knowledge to the next generation. However, there are too many out there who think they are skilled for no other reason than they know all the latest buzzwords and fashionable techniques. These people can "talk the talk", but they cannot "walk the walk". They may know lots of words and lots of theories, but they lack the ability to translate those words into simple, effective architectures and simple, effective and maintainable code.

Some people equate "maintainable" as adhering to a particular set of detailed standards or "best practices". Unfortunately a large percentage of these standards go beyond what is absolutely necessary and are filled with too much trivial detail and waste time on nit-picking irrelevances. This theme is explored further in Best Practices, not Excess Practices.

An apprentice cannot become a master simply by being taught, he has to learn. Learning comes from experience, from trial and error. Here is a good description of experience:

Experience helps to prevent you from making mistakes. You gain experience by making mistakes.

In his article Write code that is easy to delete, not easy to extend the author describes experience as follows:

Becoming a professional software developer is accumulating a back-catalogue of regrets and mistakes. You learn nothing from success. It is not that you know what good code looks like, but the scars of bad code are fresh in your mind.

Despite what people say it is impossible to produce "perfect" software, the primary reason being that nobody can define exactly what "perfect" actually means. However, if you ask a group of developers to define "imperfect" software the only consistent phrase you will hear is "software not written by me" or possibly "software not written to standards approved by me".

All software is a compromise between several factors - what the user wants, when they want it, and how much they are prepared to pay for it. The quality of a solution often depends on how many resources you throw at it, so if the resources are limited then you have no choice but to do the best you can within those limitations.

Another factor that should be taken into consideration when calculating the cost of a piece of software is the cost of the hardware on which it will run. A piece of software which is 10% cheaper than its nearest rival may not actually be that attractive if it requires hardware which is 200% more expensive.

All these factors - cost of development, speed of development, hardware costs, effectiveness of the solution - must be weighed in the balance and be the subject of a Cost-Benefit Analysis in order to determine how to get the most bang for your buck. Spending 1 penny to save £1 would be a good investment, but spending £1 to save a penny would not. If the cost of a mathematical program doubles for each decimal place of precision then instead of asking "How many decimal places do I want?" the customer should ask the question "How many decimal places can I afford?" as well as "How many decimal places do I need?" If a GPS system which can pinpoint your position to within 25 feet of accuracy costs £100, but one which gives 1 foot of accuracy costs £1,000 then the level of precision which you can expect is limited by your budget and not the skill of the person who built the device.

The cost of a piece of software should be gauged against its value or how much money it will save over a period of time. This is sometimes known as Return On Investment (ROI). If a piece of software can reduce costs to the extent that it can pay for itself in a short space of time, then after that time has elapsed you will be in profit and this will be considered to be a good investment. However, if this time period stretches into decades then the ROI will be less attractive. The savings which a piece of software may produce may be classified as follows:

Another point to keep in mind about being cost effective is to do enough work to complete the task and then stop. Clayton Neff pointed out in his article When is Enough, Enough? that there are some programmers out there who always think that what they have produced is not quite perfect, and all they need to do is tidy it up a bit here, refactor it a bit there, add in another design pattern here, tweak it a bit there, and only then will it be perfect. Unfortunately perfection is difficult to achieve because it means different things to different people, so what seems perfect to one programmer may seem putrid to another. What seems perfect today may seem putrid tomorrow. Refactoring the code may not actually make it better, just different, and unless you are actually fixing a bug or adding in a new feature you should not be working on the code at all. It is not unknown for a simple piece of unwarranted refactoring to actually introduce bugs instead of improvements, so if it ain't broke don't fix it.

In my long career I have encountered numerous people whose basic understanding of the art of software development is simply wrong, and if you can't get the basics right then you are building on shaky foundations and creating a disaster that is waiting to happen. Some of these misunderstandings are described in the following sections.

Although computer programmers like to call themselves "software engineers" nowadays, a lot of them are nothing more than "fitters" or "apprentice engineers". So what's the difference?

A fitter is to an engineer as a monkey is to an organ grinder.

If a programmer is incapable of writing a program without the aid of someone else's framework, then he's not an engineer, he's a fitter.

If a programmer is incapable of building his own framework, then he's not an engineer, he's a fitter.

If a programmer is incapable of writing software without the use of a collection of third-party libraries, then he's not an engineer, he's a fitter.

If a programmer finds a fault in a third-party library, but is unable to find the fault and fix it himself, then he's not an engineer, he's a fitter.

If a programmer can only work from other people's designs, then he's not an master engineer, he's an apprentice.

If a programmer can only design small parts of an application and not an entire application, then he's not an engineer, he's an apprentice.

The term dogmatic is defined as follows:

Asserting opinions in a doctrinaire or arrogant manner; opinionated - dictionary.com

Characterized by an authoritative, arrogant assertion of unproved or unprovable principles - thefreedictionary.com

(of a statement, opinion, etc) forcibly asserted as if authoritative and unchallengeable - collinsdictionary.com

The term pragmatic is defined as follows:

Action or policy dictated by consideration of the immediate practical consequences rather than by theory or dogma - dictionary.com

The term heretic is defined as follows:

anyone who does not conform to an established attitude, doctrine, or principle - dictionary.com

In any sphere of human endeavour you will find that someone identifies a target or goal to be reached (such as developing better software through OOP) and then identifies a set of guidelines to help others reach the same goal. Unfortunately other people come along and interpret these "guidelines" as being "rules" which must be followed at all times and without question. Yet more people come along and offer different interpretations of these rules, and some go as far as inventing completely new rules which other people then reinterpret into something else entirely. And so on ad nauseam ad infinitum. You end up with the situation where you cannot see the target because it is buried under mountains of rules. The dogmatist will follow the rules blindly and assume that he will hit the target eventually. The pragmatist will identify the target, and ignore all those rules which he sees as nothing but obstructions or impediments. The pragmatist will therefore reach the target sooner and with less effort.

Working with programmers of different skill levels but who are willing to learn is one thing, but trying to deal with those whose ideas run contrary to your own personal (and in my case, extensive) experience can sometimes be exasperating, especially when they try to enforce those ideas onto you in a purely dogmatic and dictatorial fashion. They seem to think that their rules, methodologies and "best practices" are absolutely sacrosanct and must be followed by the masses to the letter without question and without dissension. They criticise severely anyone who dares to challenge their beliefs, and anyone who dares to try anything different, or deviate from the authorised path, is branded as a heretic. As a pragmatic programmer I don't follow rules blindly and assume that the results must be acceptable, I concentrate on achieving the best result possible with the resources available to me, and I will adopt, adapt or discard any methodologies, practices or artificial/personal rules as I see fit. This may upset the dogmatists, but I'm afraid that their opinions are less relevant than those of the end user, the paying customer.

This attitude that everyone must follow a single set of rules, regardless of the consequences, just to conform to someone's idea of "purity" is not, in my humble opinion, having the effect of increasing the pool of quality programmers. Instead it is achieving the opposite - by stifling dissent, by stifling experimentation with anything other then the "approved" way, it is leading an entire generation of programmers down the wrong path.

Progress comes from innovation, not stagnation. Innovation comes from trying something different. If you are not allowed to try anything different then how can it be possible to make progress?

This means that the pragmatic programmer, by not following the rules laid down by the dogmatists, is automatically branded a heretic.

This also means that the dogmatic programmer, by disallowing experimentation and innovation, is impeding progress by perpetuating the current state of affairs.

There are two types of person in this world:

Dogmatists fall into the first category. They only know what they have been taught and never bother trying to learn anything for themselves. They never investigate if there are any alternative methods which may produce better or even comparable results. They have closed minds and do not like their beliefs being questioned. They are taught one method and blindly believe that it is the only method that should be followed. They automatically consider different methods as wrong even though they may achieve the exact same result - that of effective software. They refuse to consider or even discuss the merits of alternative methodologies and dismiss these alternative thinkers as either non-believers who should be ignored, or heretics who should be burned at the stake. They are taught a method or technique which may have benefits under certain circumstances and blindly use that method or technique under all circumstances without checking that they actually have the problem for which the solution was designed to solve. The indiscriminate use of a method or technique shows a distinct lack of thought regarding the problem, and if the problem has not been thought out thoroughly then how can you be confident that the solution actually solves the problem in the most effective manner, or even solves it at all? If you ask a dogmatist why a particular rule, methodology or technique should be followed he will simply say "Because it is the way it is done!" but he will not be able to explain why it is better than any of the alternatives.

Pragmatists do not accept anything at face value. They have open minds and will want to question everything. They listen to what they have been taught and use this as the first step, not the only step, in the journey for knowledge. If they hear about a new technique, approach or methodology they will examine it and perhaps play with it to see if the hype is actually justified. If they come across anything which has merit, even in limited circumstances, then they will use that new technique in preference to something older and more established. A pragmatist has the experience to know whether a new technique offers a better solution or merely a more complicated solution, and will always favor the simple over the complex even when others around him proclaim "this is the way of the future". He is prepared to experiment, adapt to changing circumstances and adopt new techniques while others stick to their "one true way". He will not jump on the bandwagon and adopt a technique just because it is the buzzword of the day - he will consider the merits, and if they fall short he will ignore it even in the face of criticism from others. A pragmatist has the ability to see through the hype and get to the heart of the matter.

Here are some differences between being taught and learning:

Remember that software development is a fast-changing world, so today's best practice may be tomorrow's impediment. Someone who is not prepared to try or even discuss alternative techniques may find themselves clinging to the old ways long after everyone else has moved on and left them far behind.

A great number of people fail to realise the fact that computer programming is an art, not a science. So what is the difference?

For example, take a battery, two wires and a container of water. Connect one end of each wire to the battery and place the other end in the water. Bubbles of hydrogen gas will appear on the negative wire (cathode) while bubbles of oxygen will appear on the positive wire (anode). This scientific experiment can be duplicated by any child in any school laboratory with exactly the same result. Guaranteed. Every time.

Art, on the other hand, is all about talent. It cannot be taught - you either have it or you don't. You may have a little talent, or you may have lots of talent. The more talent the artist has then the larger the audience who will likely appreciate his work, and the more value his work will be perceived to have. Those with little or no talent will produce work of little or no value. An artist can take a lump of clay and mould it into something beautiful. A non-artist can take a lump of clay and mould it into - another lump of clay. A skilled sculptor can take a hammer and chisel to a piece of stone and produce a beautiful statue. An unskilled novice can take a hammer and chisel to a large piece of stone and produce a pile of little pieces of stone.

It is not possible to teach an art to someone who does not have the basic talent to begin with. You cannot give a book on how to play the piano to a non-talented individual and expect them to become a concert pianist. You cannot give a book on how to write novels to a non-talented individual and expect them to become a novelist.

There are some disciplines which require a combination of art and science. Take the construction of bridges or buildings, for example: scientific principles are required to ensure the structure will be able to bear the necessary loads without falling down, but artistic talent is required to make it look good. An ugly structure will not win many admirers, and will not enhance the architect's reputation and win repeat business. Nor will a beautiful structure which collapses shortly after being built, A beautiful structure may be maintained for centuries, whereas an ugly one may be demolished within a few years.

Computer programming requires talent - the more talent the better the programmer. A successful programmer does not have to be a member of Mensa or even have a Computer Science degree, but neither should he be a candidate for the laughing academy or funny farm. Although the programmer has to work within the limitations of the underlying hardware and the associated software (operating system, programming language, tool sets, database, et cetera), the biggest limitation by far is his/her own intellect, talent and skill. Writing software can be described as writing an instruction manual for a computer to follow. The computer does not have any intelligence of its own, it is simply a very fast idiot that does exactly what it is told, literally, without question and without deviation. If there is something wrong, missing or vague in those instructions then the eventual outcome may not be as expected. The successful programmer must therefore have a logical and structured mind, with an eye for the finest of details, and understand how the computer will attempt to follow the instructions which it has been given. The problem of trying to teach people how to be effective programmers is just like trying to write an instruction manual for humans on how to write instruction manuals for machines. Remember that only one of those two is supposed to be the idiot.

Although there are experienced developers who can describe certain "principles" or techniques which they follow in order to achieve certain results, these principles cannot be implemented effectively by unskilled workers in a robotic fashion. Although a principle may be followed in spirit, the effectiveness of the actual implementation is down to the skill of the individual developer. In the blog post When are design patterns the problem instead of the solution? T. E. D. wrote the following regarding the (over) use of design patterns:

My problem with patterns is that there seems to be a central lie at the core of the concept: The idea that if you can somehow categorize the code experts write, then anyone can write expert code by just recognizing and mechanically applying the categories. That sounds great to managers, as expert software designers are relatively rare. The problem is that it isn't true.

The truth is that you can't write expert-quality code with "design patterns" any more than you can design your own professional fashion designer-quality clothing using only sewing patterns.

The problem with copying what experts do without understanding what exactly it is they are doing and why leads to a condition known as Cargo Cult Programming or Cargo Cult Software Engineering. Just because you use the same procedures, processes and design patterns that the experts use does not guarantee that your results will be just as good as theirs.

It is also rare for even a relatively simple computer program to rely on the implementation of a single principle as there are usually several different steps that a program has to perform, and each step may involve a different principle. Writing a computer program involves identifying what steps need to be taken and in what sequence, then writing the code to implement each of those steps in that incredible level of detail required by those idiotic machines. There is no routine to be called or piece of code which can be copied in order to implement a programming principle. You cannot tell the computer to "implement the Single Responsibility Principle" as each implementation has to be written by hand using a different set of variables, and anything which has to be written by hand is down to the skill of the person doing the writing. Different programmers can attempt to follow the same set of principles, but because these principles are open to a great deal of interpretation, and sometimes too much interpretation (and therefore mis-interpretation) they are not guaranteed to produce identical results, so where is the science in that?

Among these principles which are vaguely defined and therefore open to misinterpretation, thereby making them of questionable value, I would put the not-so-SOLID principles:

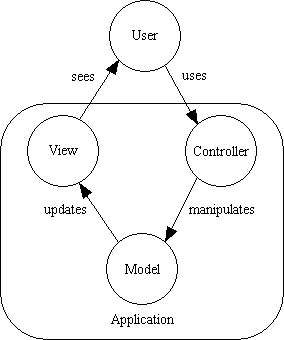

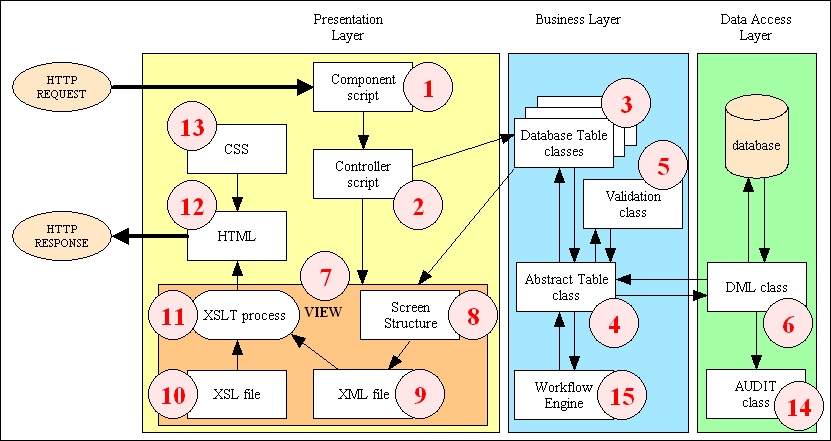

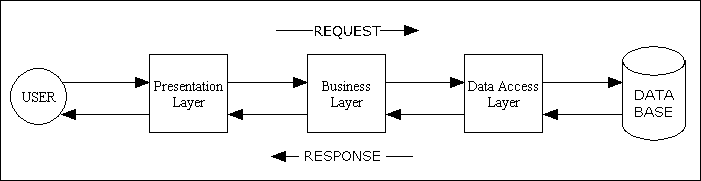

What is a "single responsibility"? How do you know when a component has too many responsibilities and needs to be split into smaller components? How do you know when to stop splitting? Despite Robert C. Martin clearly stating that the three areas of "responsibility" or "concern" which should be separated are GUI logic, business logic and database logic, there are far too many programmers who continue the separation process to ridiculous extremes. My framework is split into 3 separate layers, and that provides all the separation I need, so I see no justification in splitting it further.

This principle does not properly identify what problem(s) it is trying to solve, and as far as I can see all it does is introduce new problems. It is therefore less problematic to ignore this principle completely.

This entire principle is only relevant if you inherit from a concrete class, but in my framework I only inherit from an abstract class.

This principle is only relevant if you use the keywords "interface" and "implements". These keywords are both optional and unnecessary in the PHP language, and as I choose not to use them in my framework this principle is consequently completely irrelevant.

In the first place the common description of this principle, which is "High-level modules should not depend on low-level modules. Both should depend on abstractions. Abstractions should not depend on details. Details should depend on abstractions."

is, in my humble opinion, total garbage. In the second place the author of this principle gives an example in the "Copy" program where its application would be appropriate and provide genuine benefits, but far too many programmers insist on applying this principle in all circumstances whether they are appropriate or not. I have found a place in my framework where I can actually make use of DI, but in those places where it is not appropriate I do not use DI at all.

Although not in SOLID, here is another common principle which I choose to ignore:

This is often stated as "favour composition over inheritance" and is intended to deal with those situations where the over use of inheritance causes problems. As I do not have deep hierarchies, only ever inheriting from a single abstract class, I do not have this problem which means that I don't need this solution.

Some great artists find it difficult to describe their skill in such a way as to allow someone else to produce comparative works. Someone once asked a famous sculptor how it was possible for him to carve such beautiful statues out of a piece of stone. He replied: The statue is already inside the stone. All I have to do is remove the pieces that don't belong.

The great sculptor may describe the tools that he uses and how he holds them to chip away at the stone, but how many of you could follow those "instructions" and produce a work of art instead of a pile of rubble?

If you give the same specification to 10 different programmers and ask them to write the code to implement that specification you will never get results which are absolutely identical, so to expect otherwise would be unrealistic, if not totally foolish. Ask 10 different painters for a picture containing a valley, a tree, a stream, mountains in the background and a cottage in the middle and you will get 10 different paintings, but you could never say that only one of them was "right" while all the others were "wrong". The only way to get identical results would be to use a paint-by-numbers kit, but even though the tasks of the painters would be reduced to that of a robot, all the artistic skill would move to the person designing the kit, and all you you end up with would be multiple copies of the same picture. There is no such thing as a paint-by-numbers kit for software developers, so the quality of the finished work will still rely on the artistic skill of the individual. Besides, multiple copies of the same program is not what you want from your developers.

Some managers seem to think that they can treat their developers as unskilled workers. They seem to think that the analysts and designers do all the creative work, and all you have to do is throw the program specifications at the developers and they will be able to churn out code like unskilled workers on an assembly line.

In his article The Developer/Non-Developer Impedance Mismatch Dustin Marx makes this observation:

Good software development managers recognize that software development can be a highly creative and challenging effort and requires skilled people who take pride in their work. Other not-so-good software managers consider software development to be a technician's job. To them, the software developer is not much more than a typist who speaks a programming language. To these managers, a good enough set of requirements and high-level design should and can be implemented by the lowest paid software developers.

In her article The Art of Programming Erika Heidi says the following:

I see programming as a form of art, but you know: not all artists are the same. As with painters, there are many programmers who only replicate things, never coming up with something original.

Genuine artists are different. They come up with new things, they set new standards for the future, they change the current environment for the better. They are not afraid of critique. The "replicators" will try to let them down, by saying "why creating something new if you can use X or Y"?

Because they are not satisfied with X or Y. Because they want to experiment and try by themselves as a learning tool. Because they want to create, they want to express themselves in code. Because they are just free to do it, even if it's not something big that will change the world.

In his article Mr. Teflon and the Failed Dream of Meritocracy the author Zed Shaw says this:

You can either write software or you can't. [....] Anyone can learn to code, but if you haven't learned to code then it's really not something you can fake. I can find you out by sitting you down and having your write some code while I watch. A faker wouldn't know how to use a text editor, run code, what to type, and other simple basic things. Whether you can do it well is a whole other difficult complex evaluation for an entirely different topic, but the difference between "can code" and "cannot" is easy to spot.

He is saying that being able to write code on it's own does not necessarily turn someone into a programmer. It is the ability to do it well when compared with other programmers that really counts. Anybody can daub coloured oils onto a canvass, but that does not automatically qualify them to be called an artist.

Bruce Eckel makes a profound observation is his article Writing Software is Like ... Writing.

See also "Computer Science" is Not Science and "Software Engineering" is Not Engineering by Findy Services and B. Jacobs.

There is no single community of software developers who use the same language, the same methodologies, the same tool sets, the same standards, who think alike and produce identical works of identical quality. There is instead a mass of individuals with their own levels of artistic and technical skill who, from time to time, may become part of a team, either small or large, within an organisation. The team may strive to work together as a cohesive unit, but each member is still an artist and not a robot who can be pre-programmed to think or function in a standard way. The output of each individual will still be based on his/her individual level of skill and not that of the most skilled member of the team, or even the average level of everyone in the team. A chain is only as strong as its weakest link, and a team is only as strong as its weakest member, so if there are any juniors in the team then it is the responsibility of the seniors to provide training and guidance.

In order for any team to be productive they have to cooperate with each other, and that cooperation can best be achieved by proper discussion and democratic decision making. The wrong way would be for someone to sidestep the democratic process and start imposing rules, disciplines, tool sets or methodologies on the team in a dictatorial or autocratic manner without discussion or agreement. This situation may arise in any of the the following ways:

Technical decisions made by non-technicians should be avoided at all costs as they are invariably the wrong decisions made for the wrong reasons. Every rule should be subject to scrutiny by the team who are expected to follow it, and if found wanting should be rejected. As Petri Kainulainen says in his article We should not enforce decisions that we cannot justify. No developer worth his salt will want to implement an idea that he knows in his heart is wrong. It has been known for developers to quit rather than work in a team that is unable to function effectively due to management interference. All technical decisions, which includes what tools, methodologies and standards to use, should only be made by the technicians within the team who will actually use those tools, methodologies and standards, and only with mutual consent. A group of individuals will find it difficult to work together as a team if they are constantly fighting restrictions and artificial rules imposed on them by bad managers instead of using their skills as software developers.

Enough is just right. More than enough is too much. Too much is not better, it is excessive and wasteful. Those who expend just enough effort to get the job done can be classed as "craftsmen" while those who don't know when to stop can be classed as "cowboys". In software development these cowboys can be so commonplace that they go unnoticed and may even be considered to be the norm.

Are there any professions where cowboys are rare simply because their excesses are deemed to be not only inefficient and unprofessional but even dangerous? One profession that comes to mind is the world of the demolition contractor. These are people who don't blow things up but instead blow them down. They don't make structures explode, they make them implode. They use just enough of the right explosive in the right place to bring the structure down into a neat pile, often with most of the debris falling within the structure's original footprint and the remainder falling within spitting distance. This produces little or no collateral damage to the surrounding area, and leaves a nice pile of debris in one place which can be easily cleaned up. This takes planning, preparation and skill. These men are masters of their craft, they are craftsmen.

A cowboy, on the other hand, is sadly lacking in the planning, preparation and skills department. He doesn't know which explosive is best, or how much to use, or where to put it, so he opts for more than enough (the "brute force" approach) in all the places he can reach. When too much explosive is used, or the wrong explosive is used, or if it's put in the wrong place, the results are always less than optimum. The wrong explosive can sometimes result in the structure being rattled instead of razed. Too much explosive can result in debris being blown large distances and damaging other structures, or perhaps even endangering human life. The cost of the clean-up operation can sometimes be astronomical. The cowboy demolitionist is very quickly spotted and shown the door.

It is easy to spot a cowboy demolitionist as the results of their efforts are clearly visible to everyone. It is less easy to spot a cowboy coder as the results of their efforts come in two parts - how well the code is executed by the computer, and how well the code is understood by the next person who reads it. If that person complains that the code is difficult to understand the author will simply say that the person is not clever enough to understand it. This is wrong - if the average reader cannot understand what has been written then the fault lies with the writer.

In the real world various societies have their own code of conduct which people follow in order to be considered as "good citizens" by their neighbours. While the overall message can be summarised as "do unto others as you would have them do unto you" it goes into finer detail with rules such as "thou shalt not kill" and "thou shalt not steal". Some of these rules may be enshrined in law so that by breaking the law you are treated as a criminal and taken out of civilised society by being sent to prison. Other aspects of personal behaviour may not be criminal offences, but they will not endear you to your fellow citizens and they will seek either to avoid being in your company or to exclude you from theirs.

The trouble is that the simple code of conduct is not good enough for some people, and they have to expand it into something much larger and much more complex. They invent something called "religion" with a particular deity or deities which must be worshipped, with particular methods, places and particular times of worship. They develop special rituals, ceremonies, incantations, festivals, prayers and music. They develop a mythology in order to explain how we came to be, our place in the universe, et cetera, and what happens in the after-life. Lastly they create a class of people (with themselves as members, of course), known as priests or clergy, who are the controllers of this religion, and they expect everyone to worship through them and pay them for the privilege of doing so. These people do not contribute to society by producing anything of value, unlike the working classes, they simply feed off people's fear of the unknown. They claim that it is only by following their particular brand of religion will you have a pleasant after-life. They take some of the original teachings and rephrase them, sometimes with different or even perverse interpretations, which results in the meaning being twisted into something which the original author would not recognise. They try to outdo each other with their adherence to these extreme interpretations to such an extent that they become More Catholic than the Pope. This is how the original idea that "women should dress modestly" is twisted into "women must cover their whole bodies so that only their eyes are visible". Extremists take this further by inflicting cruel tortures and hideous deaths on non-believers so that they can experience maximum unpleasantness before they reach the after-life. They believe that their teachings are sacrosanct and must not be questioned, so if they believe that geocentrism then woe betide anyone who believes that heliocentrism. If they believe in creationism then woe betide anyone who believes in evolutionism/Darwinism.

Following a particular religion does not automatically make you a good citizen:

Similarly in the world of software there is a definition of a "good programmer" with starts with a simple code of conduct - write code that another human being can read and understand. Similarly there are specific rules to help you reach this objective - "use meaningful function names", "use meaningful variable names", "use a logical structure".

The trouble is that the simple code of conduct is not good enough for some people, and they have to expand it into something much larger and much more complex. They produce various documents known as "standards" or "best practices" which go into more and more levels of detail. They decide whether the names should be in snake_case or camelCase, whether to use tabs or spaces for indentation, the placement of braces, which design patterns to use, how they should be implemented, which framework to use, et cetera. They consider themselves to be experts, the "paradigm police", who have the right to tell others what to do. Their approach is "if you want to be one of us then do what you are told, don't question anything, keep your head down, keep your mouth shut, and don't rock the boat". They delight in using more and more complex structures in order to make it seem that you need special powers or skills in order to be a programmer. They are the high priests of OO and everyone else is expected to worship at their altar. They take some of the original teachings and rephrase them, sometimes with different interpretations, which results in the meaning being twisted into something which the original author would not recognise. This is how the original idea that "encapsulation means implementation hiding" is twisted into "information is part of the implementation, therefore encapsulation also means information hiding". Non-believers like myself are treated as heretics and outcasts, but at least these "priests" do not have the power to burn heretics at the stake (although some of them wish that they did).

Following a particular set of best practices does not automatically make you a good programmer:

I have often been complimented on the readability of my code because I put readability, simplicity and common sense above cleverness and complexity, and the fact that I have chosen to ignore all the latest fashionable trends and practices as they have arisen over the years does not make my code any less readable.

A lot of young developers are often told Don't bother trying to re-invent the wheel

meaning that instead of trying to design and build a solution of their own they should save time by using an existing library or framework which has been written by someone else. This can actually be counter-productive as the novice can spend a lot of time searching for possible solutions and then working out which one is the closest fit for his circumstances. This is then followed by the problem of trying to get different libraries written by different people in different styles to then work together in unison to create a whole "something" instead of just a collection of disparate parts. In the article Reinvent the Wheel! it says that the expression is actually invalid as there is no such thing as a perfect wheel, or a one-size-fits-all wheel, so it is only you who can provide the proper solution for your particular set of circumstances. Instead of using a single existing but ill-fitting solution in its entirety you may actually end up by borrowing bits and pieces from several solutions and add a few new bits of your own, but this is a start. Eventually you will use less and less code that others have written and more and more code that you have written.

The practice of doing nothing than assembling components that other people have written is that you never learn to create components yourself. In other words you will always be a fitter and not an engineer. If you are an novice it is perfectly acceptable to look at samples of what other people have done, but eventually you will have to learn to write your own code. As it says in Are You a Copycat? you should eventually stop being a copyist and start being a creator of original works. You need to learn to think for yourself and stop letting other people do your thinking for you, otherwise you could end up as a Cargo Cult Software Engineer who suffers from the Lemming Effect, the Bandwagon Effect or the Monkey See, Monkey Do syndrome.

If everyone in the whole world was an imitator, a copyist, then the world would stagnate. Progress can only be made through innovation, by trying something different, so anyone who tells you Don't bother thinking for yourself, copy what others have done

is actually putting a block on progress and perpetuating the status quo.

Here are a few personal golden rules which I have followed for a long time, and which have always served me well.

A good programmer is one who writes simple code to perform complex tasks, not complex code to perform simple tasks. Simple code is easier to understand than complex code, which also makes it easier to maintain. Writing code which is simple and maintainable should always be preferred over writing code which is clever yet difficult to maintain. To put it another way - any idiot can write code that only a genius can understand, but a true genius can write code that any idiot can understand.

If you have a choice between two possible solutions - a simple one and a complex one - ALWAYS go for the simplest solution. If you don't, then someone who looks at your code and is unable to quickly ascertain how it works is liable to label it as either "magic stuff happens here" or, even worse, "here be dragons!"

This has a more modern variation called Do The Simplest Thing That Could Possibly Work. Some people question the idea of working on something that could only "possibly" work, but they fail to realise that in reality you think of a possible solution, code it and then you test it. If the test fails then you try another idea and test that, and only stop when you have the simplest idea that actually works.

The opposite of KISS is KICK or even LMIMCTIRIJTPHCWA.

If you have written a piece of code and later on you find that you need to use it somewhere else, do not take the easy way out and paste a copy of that code in your second location as when you come to update that code you will find that you have multiple copies to maintain, and then it becomes easy to miss out one of the copies. Your program will then continue to execute the old, worn out code instead of the bright and shiny new code. You should instead put that code into a shared library so that you have a single copy which can be referenced from as many places as you like. After making any changes to that single copy in the shared library you will find that those changes are automatically used in every part of your application.

Turning a block of code into a reusable routine takes skill and effort, but it is a genuine investment. In my career I have known several developers who were too lazy to make the effort, or who couldn't see the point, and they wondered why I didn't rate their skills very highly. In one of my early projects as a junior programmer I was prevented from adding to the routine library simply because the project leader refused to create one. This state of affairs was not rectified until I became leader of my own project and could build my own shared library, after which my library of routines quickly grew until it became a full-blown framework.

However, do not take this idea too far and use it on blocks of code which are less than 5 lines long. Just because your program contains lots of places where a variable is incremented by 1 is no excuse to put that "add 1 to x" into its own routine. Unless the code is particularly complex I view any function, routine or method which has 5 or less lines with deep suspicion.

You should concentrate your efforts on what you actually need today and ignore those things which you think may be useful at some time in the future. When the future eventually arrives it has a habit of looking completely different from how you envisaged it in the past, so all that effort you made was completely wasted. Stick to the requirements as they are known today, and leave the future to the future.

A variation on this theme is Just Sufficient Implementation.

If something works then avoid the temptation to tinker with it just to make it work better as the act of tinkering is liable to break something. Once a program has been completed and signed off you should leave it alone and not touch it again until it becomes the subject of a Change Request. This idea is echoed in When is Enough, Enough?

This completely contradicts the modern philosophy which states that you should keep refactoring your code until it is perfect. In the first place nobody can define what "perfect" actually means as it is a totally subjective concept. In the second place if you spend any amount of time on a program and when you have finished it does nothing more than what it did before you changed it, then that time has been totally wasted. You would have great difficulty in justifying this wasted time to your paymasters, especially when there are genuine problems which should have a far higher priority than a little bit of tinkering with something that ain't broke.

On more than one occasion someone has tried to point out where there is something wrong with my code which needs to be fixed, but on closer examination the "problem" doesn't actually exist (except in his tiny mind). In these cases any time spent on implementing a solution would be time wasted as it doesn't actually make the code run faster or more reliably. Amongst these so-called "errors" have been the following:

That name is in snake_case when it should be in camelCase.I asked him if he could read the name in its present form, to which he responded in the affirmative. I asked him if the name conveyed a proper meaning, to which he responded in the affirmative. I then told him quite bluntly: "If you can read it and understand it, then that is all that is required, so go away and stop wasting my time".

You are not using the right design patterns.This shows that the person does not understand how to use design patterns properly. I do not have to use a particular design pattern in order to prove that I don't have the problem which the pattern was designed to solve. My code is as simple as possible and has a a logical structure that is easy to follow and therefore easy to maintain, so I don't need to pad it out with unnecessary design patterns.

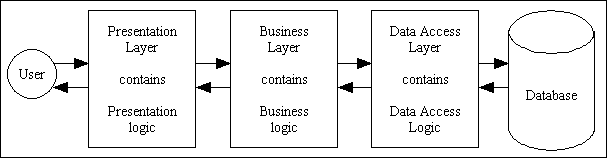

You are not using that design pattern properly.I pointed out to him that a design pattern merely describes a design and not a particular implementation, so any implementation which performs the objective of the design can never be described as wrong. I have often been told that my implementation of the Model-View-Controller pattern is wrong, or that my implementation of the Singleton pattern is wrong, but when I ask my critic to point out where my implementation deviates from the objectives of the pattern in question they are unable to provide an intelligent response. As far as I am concerned if my code already achieves the correct result, and changing my implementation to match theirs would achieve exactly the same result, then there would be absolutely no benefit in changing my implementation to match theirs.

You are polluting global namespace.Global variables have their use in every programming language, such as being able to access them without them being passed as arguments inside every method call. This is not to say that I would prefer using a global variable instead of a method argument, but there are times when you have a collection of variables which may be accessed and which would be too cumbersome to include in every method call. Like anything which can be used, they can also be over-used, mis-used or even ab-used. Some developers go to great lengths to avoid the use of any global variables, so they put them inside objects which still have to be accessed globally. How is a global object different from a global variable? How much code do you have to write so that you can access a variable through a global object instead of the standard global namespace? My use of global variables does not cause a problem (except in the minds of the purists, but their opinions do not matter to me) so as far as I am concerned their use does not need a solution.

On more than one occasion I have seen someone devise a particular solution to a particular problem, only for others to include that solution in their own programs even when they don't have the same problem. What is the justification for that? Do they think that it's a cure-all for an endless list of unconnected problems? Some programmers don't have a clue whether that have that particular problem or not, so they implement the solution just to be on the safe side. They simply do not realise that writing code that you don't need is nothing more than a waste of time and effort, and will confuse the next programmer down the line who may spend valuable time investigating a piece of code only to find out that it doesn't actually do anything useful, or that the same result could have been achieved with far less code.

I am often asked ridiculous questions such as:

Why aren't you using an auto-loader?to which I reply

Because I don't have the problem for which auto-loaders are the solution.

Why don't you use namespaces?to which I reply

Because I don't have the problem for which namespaces are the solution.

Why don't you use interfaces?to which I reply

Because they are not a solution to any sort of problem.

Why don't you ....?to which I reply

Because I don't have to.

When I turn the question around and ask Why are you using all these things?

the answer is usually along the lines of I have to adopt all the latest fads and fashions in order to keep up with everyone else

. This desire to follow certain practices just because other people do is a classic example of the Lemming Effect or the Bandwagon Effect. I am not a lemming, I am a maverick, a non-conformist.

It is usually the symptoms which are caused by a problem that are noticed first, and it may take a while for the real problem to be identified. Simply masking the symptoms so that they are less visible or cause less damage does not cure the problem at all. It will still fester and bubble and usually cause bigger and nastier symptoms to appear somewhere else. The best way to deal with a problem is not to have that problem in the first place. Too many of today's young programmers spend time reimplementing the same cure over and over again simply because they don't have the ability to identify and eliminate the underlying problem. If you go to your doctor and complain "When I bang my head against a brick wall I get a headache" and he prescribes headache tablets, then he is making a mistake by treating the symptoms of the problem instead of tackling its underlying cause. What if the medicine causes unpleasant side-effects? Will he prescribe more medicine which has its own set of side-effects? The correct answer is "Stop banging your head against the brick wall!" so why don't today's programmers follow the same pragmatic line of thought and prevent problems instead of dealing with the symptoms? For example, an Object Relational Mapper is seen as the cure for the problem of Object-Relational Impedance Mismatch, but for me the best solution would be to prevent the mismatch in the first place by ignoring OOD and sticking with the more reliable DB design. The Composite Reuse Principle (CRP) is seen as the cure when inheritance is over used, but surely it would be better not to over use inheritance in the first place.

Each journey has a start point and an end point. Journeys can be short or long depending on the distance, and the number of steps required is directly proportional to the distance. The most efficient journey is the one which takes the fewest number of steps, and the fewest number of steps can be calculated by measuring the distance along a straight line between the start and end points. That is a simple view, and in the real world there can be more complexities thrown into the mix. There can be obstacles in your way such as mountains, valleys, rivers, gorges, forests and swamps, but these obstacles may be offset by other resources being at your disposal, such as a bicycle, a car, a train, a boat or an aeroplane. This combination of obstacles and resources then changes the journey calculation from the smallest number of steps to the quickest time. Then you throw in the fact that each of these resources may come with a different cost, which then changes the calculation to the shortest time that you can afford. You then have to balance how much you are prepared to pay with how quick you want to be.

It is one thing to stand at the start point and see the target, the end point, in the distance. You can see the real obstacles in your path, and you know what resources are at your disposal, so you do your best to calculate the most cost-effective journey. Remember that the most cost-effective may not be the quickest available but the quickest that you can afford. It is one thing to deal with real obstacles, but is a whole new ball game when, after you've started your journey, you suddenly find out that you also have to deal with an array of artificial obstacles thrown up by the let's-make-it-more-complicated-than-it-really-is-just-to-prove-how-clever-we-are brigade. These new obstacles are artificial as they do not exist in the real world like mountains and rivers, but only as rules in the minds of those who consider themselves to be the intellectual elite, the paradigm police, the object taliban. You may know that you can ignore these artificial rules and still complete your journey and achieve a cost-effective result, but the paradigm police will continually chastise you for breaking their precious rules, for being a heretic. I consider myself to be experienced enough to know the difference between a real obstacle and an artificial rule, and I personally don't care about the opinions of the paradigm police, so if one of these artificial rules gets in my way I will exercise my rights as a free thinker and ignore it. This may upset the paradigm police, but the proof of the pudding is in the eating. It is not good enough to say "I have followed your rules, therefore the results must be OK". If the pudding tastes awful the fact that you followed the recipe to the letter will be irrelevant.

A facetious answer would be "If it was too easy then anybody could do it".

One of the big problems with OOP is that Nobody Agrees On What OO Is. If you ask a group of programmers for their definition of OOP you will get wildly different answers, some of which are documented in What OOP is not. They all seem to have forgotten the original and much simpler definition, which is:

Object Oriented Programming is programming which is oriented around objects, thus taking advantage of Encapsulation, Inheritance and Polymorphism to increase code reuse and decrease code maintenance.

Note that the use of static methods is not object-oriented programming for the simple reason that no classes are instantiated into objects.

One of advantages of OOP is supposed to be that:

OOP is easier to learn for those new to computer programming than previous approaches, and its approach is often simpler to develop and to maintain, lending itself to more direct analysis, coding, and understanding of complex situations and procedures than other programming methods.

After reading these descriptions and having experienced procedural programming for several decades I approached OOP with the assumption that:

Having built hundreds of database transactions in several languages, and having built frameworks in two of those languages, I set about building a new framework using the OO capabilities of PHP. I then began to publish my results on my personal website, and received nothing but abuse.

OOP is supposed to be easier to learn. I say supposed for the simple reason that, like many things in life, much was promised but little was delivered. Too many cowboys have hacked away at the original principles of OOP with the result that instead of Object Oriented Programming we have Abject Oriented Programming.

As a long-time practitioner of one of those "previous approaches" and "other programming methods" I therefore expect OOP to deliver programs which are easier to maintain due to them having more reusable code and therefore less code overall. Having written plenty of structured programs using the procedural paradigm I expect OOP to deliver programs with comparable, if not better structures. This is what I strove to achieve in my own work, but when I began to publish what I had done I received nothing but abuse, as documented in What is/is not considered to be good OO programming and In the world of OOP am I Hero or Heretic? The tone of all this criticism can be summarised in your approach is too simple:

If you have one class per database table you are relegating each class to being no more than a simple transport mechanism for moving data between the database and the user interface. It is supposed to be more complicated than that.

You are missing an important point - every user transaction starts life as being simple, with complications only added in afterwards as and when necessary. This is the basic pattern for every user transaction in every database application that has ever been built. Data moves between the User Interface (UI) and the database by passing through the business/domain layer where the business rules are processed. This is achieved with a mixture of boilerplate code which provides the transport mechanism and custom code which provides the business rules. All I have done is build on that pattern by placing the sharable boilerplate code in an abstract table class which is then inherited by every concrete table class. This has then allowed me to employ the Template Method Pattern so that all the non-standard customisable code can be placed in the relevant "hook" methods in each table's subclass. After using the framework to build a basic user transaction it can be run immediately to access the database, after which the developer can add business rules by modifying the relevant subclass.

Some developers still employ a technique which involves starting with the business rules and then plugging in the boilerplate code. My technique is the reverse - the framework provides the boilerplate code in an abstract table class after which the developer plugs in the business rules in the relevant "hook" methods within each concrete table class. Additional boilerplate code for each task (user transaction, or use case) is provided by the framework in the form of reusable page controllers.

I have been building database applications for several decades in several different languages, and in that time I have built thousands of programs. Every one of these, regardless of which business domain they are in, follows the same pattern in that they perform one or more CRUD operations on one or more database tables aided by a screen (which nowadays is HTML) on the client device. This part of the program's functionality, the moving of data between the client device and the database, is so similar that it can be provided using boilerplate code which can, in turn, be provided by the framework. Every complicated program starts off by being a simple program which can be expanded by adding business rules which cannot be covered by the framework. The standard code is provided by a series of Template Methods which are defined within an abstract table class. This then allows any business rules to be included in any table subclass simply by adding the necessary code into any of the predefined hook methods. The standard, basic functionality is provided by the framework while the complicated business rules are added by the programmer.

In my opinion one of the biggest causes of software which is overly complex has been the over emphasis of design patterns which, although a good idea in theory have turned into something completely different in practice. Young programmers don't just use patterns to a solve a genuine problem, they use them whether they have the problem or not in the hope that they will prevent any problems from ever appearing in the first place. So instead of using patterns intelligently they over-use them, mis-use them, and end up by ab-using them. What they fail to realise is that the abuse of design patterns actually causes a problem which the addition of even more design patterns will never solve. When Erich Gamma, one of the authors of the GoF book, heard about a programmer who had tried to put all 23 patterns into a single program he had this to say:

Trying to use all the patterns is a bad thing, because you will end up with synthetic designs - speculative designs that have flexibility that no one needs. These days software is too complex. We can't afford to speculate what else it should do. We need to really focus on what it needs.

If you want to see prime examples of how not to use design patterns please take a look at the following:

In this blog entry The Dark Side Of Software Development That No One Talks About John Sonmez wrote:

I originally started this blog because I was fed up with all the egos that were trying to make programming seem so much harder than it really is. My whole mission in life for the past few years has been to take things that other people are trying to make seem complex (so that they can appear smarter or superior) and instead make them simple.

Another reason why I think that the basic concepts of OOP have been polluted by ever more levels of complexity is that some people like to reinterpret the existing rules in completely perverse ways. This just goes to show that the original rules were not written precisely enough if they are open to so much mis-interpretation. If rewriting the existing rules is not bad enough there is another breed of developer who takes this perversity to new levels by inventing completely new rules which, instead of clarifying the situation, just add layers of fog and indirection. Take, for example, the idea that inheritance breaks encapsulation. The idea is that this produces tight coupling between the base class and the derived class, which simply goes to show that they do not have a clear idea of how to use inheritance properly to share code between classes. If I have an abstract/base class called A and a concrete/derived class called C it means that class C cannot exist on its own without without the shared code that exists within class A. The idea then that the contents of A should be kept hidden from C and treated as if they were separate entities makes a complete mockery of the whole idea of inheritance.

There are also developers out there who seem to be forever dreaming up new ideas to make the language more OO than it already is, or advocating the use of new procedures or toolsets to make the developer's life more complex than it already is. This is where all these optional add-ons originated. According to th3james all these ideas seem to follow a familiar lifecycle:

While it may be indicated that an idea has certain benefits in some circumstances there are too many developers out there who don't have the intelligence to work out if those circumstances exist or not in their current situation, so rather than thinking for themselves they apply that idea in all circumstances in an attempt to cover all the bases and make their asses fireproof. Unfortunately if you apply an idea indiscriminately and without thinking it is the without thinking aspect which is likely to cause problems in the future.

Some developers seem to think that any idiot can write simple code, and to prove their mental superiority they have to write code which is more complicated, more obfuscated, with more layers of indirection, something which only a genius like them can understand. I'm sorry, but if you write complicated code that only a genius can understand then your code is incapable of being read, understood and maintained by the average programmer. The true mark of genius is to write simple code that anyone can understand. To put it another way - The mark of genius is to achieve complex things in a simple manner, not to achieve simple things in a complex manner.

In his paper Protected Variation: The Importance of Being Closed (PDF) the author Craig Larman makes this interesting observation: